Continuous Integration and Continuous Deployment (CI/CD) in Google Cloud Platform (GCP) can be incredibly powerful – but only if it’s set up right.

The promise is simple: every commit gets tested, packaged, and deployed automatically, without downtime. But the reality often looks different. Pipelines break, secrets leak, deployments overwrite production, or rollbacks take hours instead of seconds.

Over the years, I’ve reviewed countless GCP pipelines and noticed the same mistakes repeating. They don’t just slow teams down – they erode trust in automation, leading to manual workarounds and late-night firefighting.

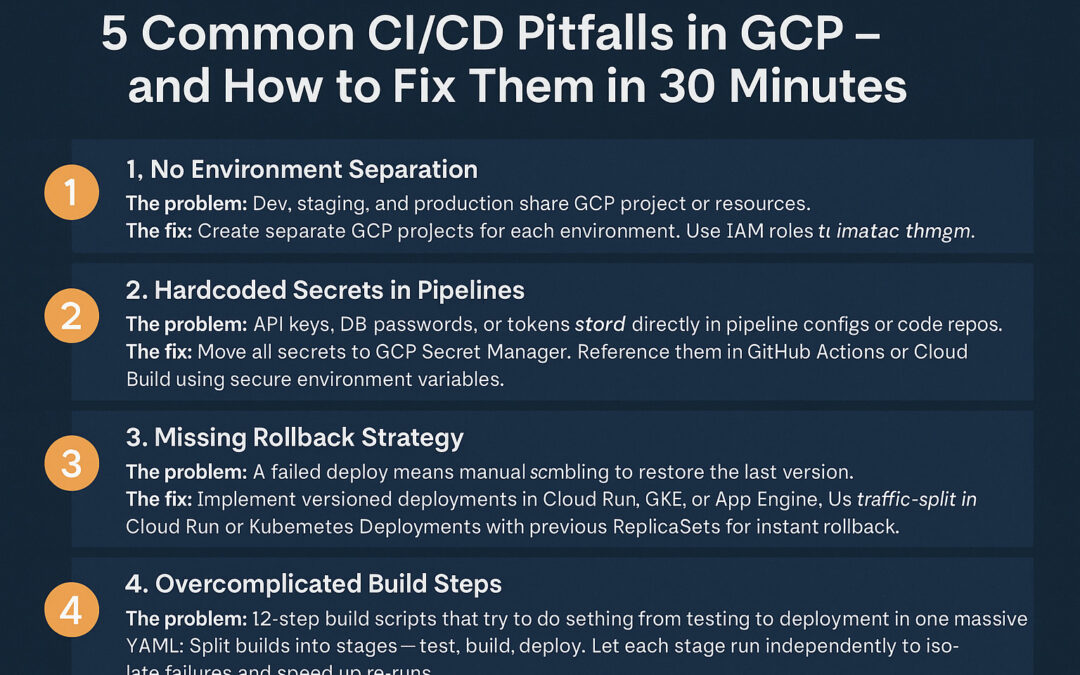

Here are five of the most common pitfalls I see in GCP CI/CD setups – and quick ways to fix them, usually in under 30 minutes.

1. No Environment Separation

The problem:

It’s surprisingly common to see Dev, Staging, and Production share the same GCP project. At first, it feels convenient: one billing account, one set of credentials, one dashboard. But then someone pushes a “test” deployment that overwrites production, or an engineer accidentally deletes a resource that was shared across environments.

This isn’t just a productivity risk – it’s a business continuity issue.

The fix (10 min):

- Create separate GCP projects for each environment. This creates natural isolation for billing, IAM, quotas, and API keys.

- Enforce access control: Developers may get Owner/Editor rights in Dev, but only read-only access in Production.

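- Manage configs with Terraform workspaces. For example:

      provider "google" {
        # The workspace name selects the project: myapp-dev, myapp-staging, or myapp-prod.
        # The "default" workspace maps to no real project, so it fails instead of touching one.
        project = "myapp-${terraform.workspace}"
      }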

This way, the same Terraform code manages all environments, but with safe boundaries.

✅ Bonus tip: Prefix resources with env names (e.g., prod-db, staging-db) to avoid accidental cross-environment usage.
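To make the separation hard to bypass, a deploy script can check which project it is about to touch before doing anything else. Here is a minimal sketch of such a guard, assuming the hypothetical project IDs myapp-dev, myapp-staging, and myapp-prod; the function names are mine, not part of any GCP tooling.

```shell
#!/usr/bin/env bash
# Sketch: map an environment name to its own GCP project and refuse anything else.
# The project IDs below are hypothetical placeholders.

project_for_env() {
  case "$1" in
    dev)     echo "myapp-dev" ;;
    staging) echo "myapp-staging" ;;
    prod)    echo "myapp-prod" ;;
    *)       echo "unknown environment: $1" >&2; return 1 ;;
  esac
}

# Fail if the project gcloud currently targets is not the one expected
# for the given environment.
assert_active_project() {
  local expected active
  expected="$(project_for_env "$1")" || return 1
  active="$(gcloud config get-value project 2>/dev/null)"
  if [ "$active" != "$expected" ]; then
    echo "refusing to deploy: active project is '$active', expected '$expected'" >&2
    return 1
  fi
}
```

A pipeline step would call something like assert_active_project prod before the deploy command, so a job that is pointed at the wrong project stops before it changes anything.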

2. Hardcoded Secrets in Pipelines

The problem:

I still see DB_PASSWORD=supersecret sitting in GitHub Actions YAML or Cloud Build configs, sometimes even committed to Git repos. This is a security nightmare: leaked credentials, production downtime, or even data exfiltration if repos go public.

The fix (5 min):

- Use GCP Secret Manager to store sensitive values.

- Reference them securely in pipelines. Example for Cloud Build:

      steps:
        - name: "gcr.io/cloud-builders/gcloud"
          entrypoint: "bash"
          args:
            - "-c"
            - |
              DB_PASS=$(gcloud secrets versions access latest --secret="db_password")
              export DB_PASS
              ./run-migrations.sh

- For GitHub Actions, use Workload Identity Federation to avoid long-lived service account keys.

✅ Bonus tip: Rotate secrets on a schedule. Secret Manager supports versioning, so a pipeline that reads the latest version picks up a new credential on its next run, with no config change and no pipeline downtime.
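Moving secrets out of the pipeline only helps if they stay out. Below is a rough sketch of a check that could run as an early pipeline step and flag lines that look like hardcoded credentials. The pattern is my own simple heuristic for uppercase, env-style names such as DB_PASSWORD; it will produce false positives (for example a CACHE_KEY setting) and is no substitute for a dedicated secret scanner.

```shell
#!/usr/bin/env bash
# Sketch: flag lines in pipeline configs that look like hardcoded credentials.
# Heuristic only: matches NAME=value or NAME: value where NAME ends in a
# sensitive-looking word and the value starts with a literal character
# (not a "$" reference such as $(...) or ${{ ... }}).

find_hardcoded_secrets() {
  # -n: line numbers, -H: always print the file name, -E: extended regex
  grep -nHE "[A-Z_]*(PASSWORD|PASS|SECRET|TOKEN|KEY)[[:space:]]*[=:][[:space:]]*[\"']?[A-Za-z0-9]" "$@"
}

# Returns non-zero (failing the step) when anything suspicious is found.
check_no_hardcoded_secrets() {
  if find_hardcoded_secrets "$@"; then
    echo "possible hardcoded secrets found" >&2
    return 1
  fi
}
```

Called as check_no_hardcoded_secrets cloudbuild.yaml .github/workflows/*.yml, it prints each suspicious line with its file name and line number, and passes silently when every sensitive value is a reference rather than a literal.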

3. Missing Rollback Strategy

The problem:

Deploy goes live, users report errors, panic mode starts. The team scrambles to “undo” the deployment, often by manually pushing the last Docker image or reverting Git commits. This wastes precious minutes – sometimes hours – and increases downtime.

The fix (15 min):

- Use versioned deployments:

- Cloud Run keeps all revisions. Rollback is just:

      gcloud run services update-traffic myservice --to-revisions <REVISION>=100

- Kubernetes (GKE) keeps ReplicaSets of older versions. Rollback with:

      kubectl rollout undo deployment myapp

- App Engine has built-in traffic splitting and rollback options.

- Automate rollback steps in your pipeline so they’re one command away.

✅ Bonus tip: Send new versions 5–10% of traffic as a canary first. If metrics drop, roll back right away, so only a small share of users ever sees the bad version.
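To show what "one command away" can look like for Cloud Run, here is a minimal sketch of a rollback helper. It assumes the newest revision is the bad deploy and the one created just before it was healthy, which will not always hold, so treat it as a starting point. The function names, and the service and region passed in, are placeholders of mine.

```shell
#!/usr/bin/env bash
# Sketch: shift all Cloud Run traffic back to the second-newest revision.
# Assumes the newest revision is the bad deploy and the previous one was healthy.

# Reads revision names (newest first, one per line) on stdin and prints the second.
previous_revision() {
  sed -n '2p'
}

rollback_cloud_run() {
  local service="$1" region="$2" prev
  # List the two most recently created revisions, newest first.
  prev="$(gcloud run revisions list \
    --service "$service" --region "$region" \
    --sort-by '~metadata.creationTimestamp' \
    --format 'value(metadata.name)' --limit 2 | previous_revision)"
  if [ -z "$prev" ]; then
    echo "no previous revision found for $service" >&2
    return 1
  fi
  # Route 100% of traffic to the previous revision.
  gcloud run services update-traffic "$service" --region "$region" \
    --to-revisions "${prev}=100"
}
```

With this in the repo, a rollback is rollback_cloud_run myservice us-central1, whether a person runs it or a failed pipeline step does.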

4. Overcomplicated Build Steps

The problem:

Pipelines often start simple, then grow into a 400-line YAML monster: run tests, lint, build Docker, run DB migrations, deploy to 3 environments, run e2e tests – all in one job. One failure means re-running everything, costing time and cloud credits.

The fix (5 min):

- Break pipelines into stages:

- Test → unit + lint

- Build → Docker image

- Deploy → environment-specific rollout

- Use Cloud Build substitutions to reuse one config across environments, or split the work into separate GitHub Actions jobs.

Example (GitHub Actions):

    jobs:
      test:
        runs-on: ubuntu-latest
        steps: [...run tests...]
      build:
        needs: test
        runs-on: ubuntu-latest   # every job needs its own runs-on
        steps: [...build & push docker...]
      deploy:
        needs: build
        runs-on: ubuntu-latest
        steps: [...deploy to GCP...]

✅ Bonus tip: Cache dependencies (npm, Maven, pip) between runs to cut minutes from build time.

5. No Pre-Deployment Smoke Tests

The problem:

Code goes straight to production without a final “is it alive?” check. Sometimes the app is completely broken and nobody notices, because there is no health check endpoint to probe or a DB migration failed silently. Users often discover the issue before engineers do.

The fix (10 min):

- Add a smoke test step in the pipeline before traffic switches.

- Example:

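      steps:
        # _SERVICE_URL is a user-defined substitution; custom names must start with "_"
        - name: "gcr.io/cloud-builders/curl"
          # --fail makes curl exit non-zero on HTTP 4xx/5xx, which fails the build
          args: ["--fail", "https://${_SERVICE_URL}/healthz"]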

- Run lightweight functional checks: can you log in, query the DB, or hit a key API route?

- Roll back automatically if smoke tests fail.

✅ Bonus tip: Use Cloud Monitoring uptime checks or synthetic monitors to continuously hit critical endpoints, even outside deployment windows.
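A freshly deployed service often needs a few seconds before it answers, so a single curl call can fail a perfectly good deploy. Here is a minimal sketch of a smoke test with retries; the function name, the defaults of 5 attempts and a 3 second pause, and the 10 second timeout are my assumptions, so tune them to your service.

```shell
#!/usr/bin/env bash
# Sketch: poll a health endpoint until it answers successfully or attempts run out.
# Usage: smoke_test URL [ATTEMPTS] [DELAY_SECONDS]

smoke_test() {
  local url="$1" attempts="${2:-5}" delay="${3:-3}" i=1
  while [ "$i" -le "$attempts" ]; do
    # --fail: non-zero exit on HTTP 400 or above
    # --max-time: give up on a request that hangs
    if curl --fail --silent --show-error --max-time 10 --output /dev/null "$url"; then
      echo "smoke test passed on attempt $i"
      return 0
    fi
    i=$((i + 1))
    # Pause before the next attempt, but not after the last one.
    if [ "$i" -le "$attempts" ]; then
      sleep "$delay"
    fi
  done
  echo "smoke test failed after $attempts attempts: $url" >&2
  return 1
}
```

Because the function returns non-zero on failure, the pipeline step fails with it, and that failure is the natural trigger for a rollback command such as gcloud run services update-traffic.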

Wrapping Up

CI/CD in GCP doesn’t have to be fragile. By avoiding these five pitfalls, you’ll:

- reduce risk of accidental outages,

- keep secrets safe,

- recover from bad deploys in seconds,

- speed up builds, and

- gain confidence that what goes live is actually working.

The goal is simple: make deployments boring. That’s the highest compliment you can give your pipeline.